If you can’t wait for the conclusion, please download the scientific paper titled Understanding and Predicting Image Memorability at a Large Scale right away. But wait: at the end of this post you can even test whether your photographs are memorable or not, based on the work of researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), who have created an algorithm that can predict almost as accurately as humans how memorable or forgettable an image is.

It would be kind of nice to know for sure how to frame and compose a photograph to make it more memorable. A lot of it is about having an eye for it, and a lot is pure math. That, at least, is the takeaway from an MIT study led by Aditya Khosla, a computer science Ph.D. student who’s heavily into computer vision. Says Khosla:

Understanding memorability can help us make systems to capture the most important information, or, conversely, to store information that humans will most likely forget. It’s like having an instant focus group that tells you how likely it is that someone will remember a visual message.

The algorithm builds on deep learning, the same family of techniques that drives Apple’s Siri, Google’s auto-complete, and Facebook’s photo-tagging, and that has spurred these tech giants to spend hundreds of millions of dollars on deep-learning startups.

Says the team’s principal research scientist Aude Oliva:

While deep-learning has propelled much progress in object recognition and scene understanding, predicting human memory has often been viewed as a higher-level cognitive process that computer scientists will never be able to tackle. Well, we can, and we did!

So… how does it work?

Well, the researchers introduce a novel experimental procedure to objectively measure human memory, which allowed them to build LaMem, the largest annotated image memorability dataset to date (60,000 images from diverse sources). Using convolutional neural networks (CNNs), they show that fine-tuned deep features outperform all other features by a large margin, reaching a rank correlation of 0.64 with the measured memorability scores. That is close to human consistency (0.68), that is, how well the scores from one group of people predict the scores from another.
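To make the 0.64 vs. 0.68 comparison concrete, here is a minimal sketch of a rank correlation, assuming "rank correlation" means Spearman's ρ, as is standard in this line of work. Only the ordering of images matters: ρ is the Pearson correlation of the ranks, so a model scores well if it sorts images from most to least memorable the same way people do. The `predicted` and `measured` numbers below are invented toy values, not LaMem data.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Plain Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy example: model scores vs. measured memorability for five images.
predicted = [0.91, 0.55, 0.78, 0.62, 0.40]
measured = [0.88, 0.60, 0.65, 0.70, 0.35]  # images 3 and 4 swap places
print(f"Spearman rho = {spearman(predicted, measured):.2f}")  # prints 0.90
```

Memorability studies commonly estimate human consistency with the same measure: split the participants into two random halves, score each image separately with each half, and compute the rank correlation between the two halves.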

Neural networks learn to correlate data without humans specifying what the underlying causes or correlations might be. They are organized in layers of processing units, each of which performs simple mathematical computations on the output of the layer before it. During training, as the network sees more examples, it readjusts its internal weights to produce more accurate predictions.
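The "layers of units that readjust" idea can be sketched in a few lines of plain Python. This is a toy two-layer network, not MemNet (which is a much larger CNN): each hidden unit computes a weighted sum of its inputs followed by a ReLU, the output unit sums the hidden outputs, and each training step nudges every weight against the gradient of the squared error. The target function `x0 + 2*x1` and all sizes and learning rates are arbitrary assumptions chosen for illustration.

```python
import random


def relu(z):
    return z if z > 0 else 0.0


class TinyNet:
    """Two layers: hidden ReLU units, then one linear output unit."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        # Weights start random; training is what makes them useful.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Layer 1: each unit takes a weighted sum of the inputs, then ReLU.
        self.z1 = [sum(w * xi for w, xi in zip(row, x)) + b
                   for row, b in zip(self.w1, self.b1)]
        self.h = [relu(z) for z in self.z1]
        # Layer 2: weighted sum of the layer-1 outputs.
        return sum(w * h for w, h in zip(self.w2, self.h)) + self.b2

    def train_step(self, x, target, lr=0.02):
        """One gradient-descent step on 0.5 * (y - target)^2."""
        y = self.forward(x)
        err = y - target
        for j in range(len(self.w2)):
            # Backpropagate through unit j using w2[j] before it is updated.
            grad_h = err * self.w2[j] * (1.0 if self.z1[j] > 0 else 0.0)
            for i in range(len(x)):
                self.w1[j][i] -= lr * grad_h * x[i]
            self.b1[j] -= lr * grad_h
            self.w2[j] -= lr * err * self.h[j]
        self.b2 -= lr * err


def mean_loss(net, data):
    return sum(0.5 * (net.forward(x) - t) ** 2 for x, t in data) / len(data)


rng = random.Random(1)
data = []
for _ in range(50):
    x = [rng.random(), rng.random()]
    data.append((x, x[0] + 2 * x[1]))  # toy target, not memorability data

net = TinyNet(n_in=2, n_hidden=8)
before = mean_loss(net, data)
for epoch in range(200):
    for x, t in data:
        net.train_step(x, t)
after = mean_loss(net, data)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

Nobody tells the network which combination of inputs matters; the repeated small weight updates discover it from the examples, which is the sense in which such networks work "without human guidance".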

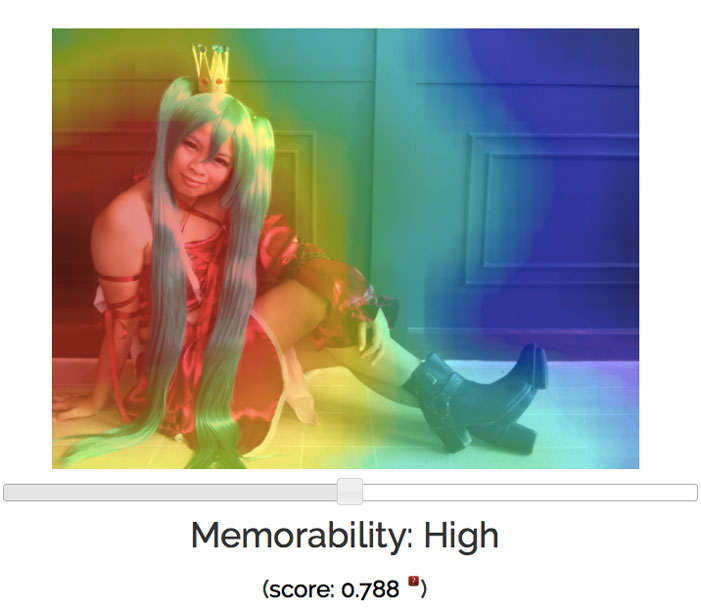

For each photo, the MemNet algorithm also creates a heat map that highlights which parts of the image are most memorable.
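How might such a heat map be computed? One generic technique is occlusion: hide one patch of the image at a time, re-score the image, and record how much the score drops. This is a sketch of that technique, not necessarily how MemNet builds its maps. The `toy_score` function is a hypothetical stand-in for a trained memorability model (it just returns mean brightness), and images are small grayscale grids for illustration.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_heat_map(image: Image, score_fn: Callable[[Image], float],
                       patch: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Score drop when each patch x patch block is replaced by `fill`."""
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = []
    for top in range(0, h, patch):
        row = []
        for left in range(0, w, patch):
            occluded = [r[:] for r in image]  # copy so the original is untouched
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    occluded[y][x] = fill
            # A large drop means the hidden region mattered to the score.
            row.append(base - score_fn(occluded))
        heat.append(row)
    return heat


def toy_score(image: Image) -> float:
    """Hypothetical stand-in for a trained model: mean pixel brightness."""
    pixels = [p for r in image for p in r]
    return sum(pixels) / len(pixels)


img = [[0.1] * 4 for _ in range(4)]
img[0][2] = img[0][3] = img[1][2] = img[1][3] = 0.9  # bright top-right block
heat = occlusion_heat_map(img, toy_score, patch=2)
for row in heat:
    print([round(v, 3) for v in row])
```

With a real model, `score_fn` would be the network's memorability prediction, and the patch size trades off map resolution against the number of forward passes needed.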

What does this all mean?

More memorable photographs could potentially help people remember what they see, but the work also provides a better understanding of the visual information that people pay attention to. That carries a key message for photographers, marketers, movie directors and other content creators: visual content can be better tailored to specific target audiences and industries, from retail clothing and logo design to, yes, good old excellent photography.

You can try out LaMem online by uploading your own photos right here. And? Memorable stuff?

What’s up next for the research team? They plan to turn it into an app that subtly tweaks photos to make them more memorable.

While you’re at it, you might also want to check out Khosla’s GazeFollow, another image-based computer vision project, which predicts where the people in an image are looking and could help understand what they are thinking about and what they might do next…