By JAWAD RIACHI

I recently downloaded DxO Optics Pro (demo version) just to experiment with the software on some photos I shot with a small Micro Four Thirds camera. The camera I used had a fairly sharp wide-angle lens (14mm F2.5). When I imported the photos, DxO Optics Pro analyzed them, connected to the Internet and downloaded the profile of the lens without any input from me! I have to admit that this was impressive. It is not rocket science, but it was nice to see a piece of software take advantage of the EXIF data in the photos and act on it. I did not have to specify anything! It just worked.

Having a background in software engineering, I decided to closely examine the EXIF data structure and learn more about it beyond the usual shutter speed, aperture, ISO and focal length. It turns out that the data is fairly extensive…
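For readers curious about what that structure looks like, here is a minimal sketch that reads tags straight from an EXIF block using only the Python standard library. It assumes you already have the TIFF-formatted payload (in a JPEG this follows the `Exif\0\0` marker inside the APP1 segment), it only decodes the ASCII, SHORT, LONG and RATIONAL field types, and it knows just a handful of tag IDs. `read_ifd`, `read_exif` and `TAG_NAMES` are illustrative names, not part of any library. Fields such as `Make`, `Model` and `LensModel` are the kind of information a lens-profile lookup could key on.

```python
import struct

# Byte size of one value for the TIFF field types this sketch decodes:
# 2 = ASCII, 3 = SHORT, 4 = LONG, 5 = RATIONAL (two LONGs).
TYPE_SIZES = {2: 1, 3: 2, 4: 4, 5: 8}

# A few well-known tag IDs from the TIFF/EXIF specifications.
TAG_NAMES = {
    0x010F: "Make",
    0x0110: "Model",
    0x829A: "ExposureTime",
    0x829D: "FNumber",
    0x8827: "ISOSpeedRatings",
    0x920A: "FocalLength",
    0xA434: "LensModel",
}
EXIF_IFD_POINTER = 0x8769  # IFD0 tag holding the offset of the Exif sub-IFD


def read_ifd(tiff: bytes, offset: int, endian: str) -> dict:
    """Read one IFD starting at `offset`; return {tag_id: decoded value}."""
    (count,) = struct.unpack_from(endian + "H", tiff, offset)
    entries = {}
    for i in range(count):
        entry = offset + 2 + 12 * i  # each IFD entry is 12 bytes
        tag, ftype, n = struct.unpack_from(endian + "HHI", tiff, entry)
        if ftype not in TYPE_SIZES:
            continue  # skip field types this sketch does not decode
        # Values of 4 bytes or less are stored inline; otherwise the last
        # 4 bytes of the entry hold an offset from the TIFF header start.
        if TYPE_SIZES[ftype] * n <= 4:
            data_at = entry + 8
        else:
            (data_at,) = struct.unpack_from(endian + "I", tiff, entry + 8)
        if ftype == 2:
            raw = tiff[data_at:data_at + n]
            entries[tag] = raw.split(b"\x00", 1)[0].decode("ascii", "replace")
        elif ftype == 5:
            vals = struct.unpack_from(endian + "I" * (2 * n), tiff, data_at)
            rats = [vals[j] / vals[j + 1] if vals[j + 1] else 0.0
                    for j in range(0, 2 * n, 2)]
            entries[tag] = rats[0] if n == 1 else rats
        else:
            fmt = endian + ("H" if ftype == 3 else "I") * n
            vals = struct.unpack_from(fmt, tiff, data_at)
            entries[tag] = vals[0] if n == 1 else list(vals)
    return entries


def read_exif(tiff: bytes) -> dict:
    """Parse IFD0 plus the Exif sub-IFD (if present); return named tags."""
    endian = {b"II": "<", b"MM": ">"}[tiff[:2]]  # Intel or Motorola order
    magic, ifd0 = struct.unpack_from(endian + "HI", tiff, 2)
    if magic != 42:
        raise ValueError("not a TIFF/EXIF block")
    tags = read_ifd(tiff, ifd0, endian)
    if EXIF_IFD_POINTER in tags:
        tags.update(read_ifd(tiff, tags.pop(EXIF_IFD_POINTER), endian))
    return {TAG_NAMES.get(t, hex(t)): v for t, v in tags.items()}
```

Unknown tags come back under their hex ID, which is a handy way to see how much more is in there beyond the usual exposure settings.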

Later on, I came across a Samsung NX300 2D|3D camera, which I had heard about but never had a chance to play with. Not being a fan of 3D images, I usually don’t pay much attention to these types of cameras, and I believe many photographers feel the same way. 3D cameras have struggled to find support among photographers. This one, however, grabbed my attention because it looked like a regular camera except for a 2D|3D button on the lens.

It turns out that this lens has two built-in shutters which are activated when shooting in 3D mode. These shutters slightly change the perspective of the captured image in order to create a stereoscopic photograph (using parallax). The result was not perfect, but it was enough to create a sense of depth.

This means that for every shot, the camera actually captures three frames: the first is the original shot, and the remaining two are used to create the Z-map.
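I don't know exactly how Samsung computes its Z-map, but the textbook approach for two rectified views is to find how far each pixel shifted between the images (its disparity `d`) and convert that to depth with `Z = f * B / d`, where `f` is the focal length in pixels and `B` the distance between the two viewpoints. The sketch below does this on a single scanline with simple sum-of-absolute-differences block matching. The function names and parameters are hypothetical, and a real implementation would work in 2D with sub-pixel refinement and smoothing.

```python
def disparity_row(left, right, max_disp, half_win=2):
    """Disparity (in pixels) of each pixel in `left`, found by SAD block matching.

    A point at column x in the left row is assumed to appear at x - d in the
    right row (rectified stereo). Pixels whose window never fits inside both
    rows keep disparity 0.
    """
    n = len(left)
    disp = [0] * n
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            cost, valid = 0, True
            for k in range(-half_win, half_win + 1):
                xl, xr = x + k, x + k - d
                if not (0 <= xl < n and 0 <= xr < n):
                    valid = False  # window falls off an image edge
                    break
                cost += abs(left[xl] - right[xr])
            if valid and cost < best_cost:
                best_d, best_cost = d, cost
        disp[x] = best_d
    return disp


def depth_from_disparity(d, focal_px, baseline_m):
    """Triangulated depth Z = f * B / d; zero disparity means infinitely far."""
    return float("inf") if d == 0 else focal_px * baseline_m / d
```

Because depth is inversely proportional to disparity, a small baseline like the one inside a single lens leaves only a few pixels of disparity to work with, which is one reason the result is "not perfect".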

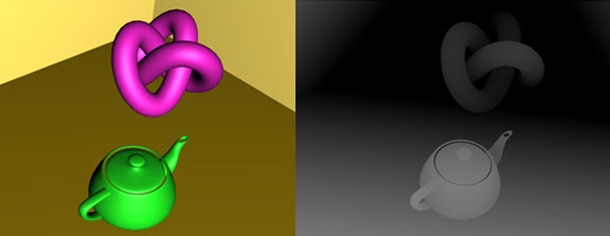

A Z-map is a grayscale image representing the depth of the objects within the frame. White represents objects close to the lens and black represents infinity.
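Only the end points of the encoding are fixed here (white is near, black is infinity). One way to get exactly that behaviour, assuming an inverse-depth curve and a hypothetical near-plane distance `z_near_m` that maps to pure white, is sketched below.

```python
import math


def depth_to_gray(depth_m, z_near_m):
    """Encode a depth in metres as an 8-bit gray level.

    Assumed inverse-depth mapping: z_near_m (or closer) -> 255 (white),
    infinity -> 0 (black), falling off as 1 / depth in between.
    """
    if depth_m <= z_near_m:
        return 255
    return round(255 * z_near_m / depth_m)  # math.inf gives 0.0 -> 0


def gray_to_depth(gray, z_near_m):
    """Invert depth_to_gray (up to quantization); gray 0 decodes to infinity."""
    return math.inf if gray == 0 else z_near_m * 255 / gray


def encode_z_map(depth_map_m, z_near_m):
    """Apply depth_to_gray to every pixel of a depth map stored as rows."""
    return [[depth_to_gray(z, z_near_m) for z in row] for row in depth_map_m]
```

Inverse depth spends most of the 256 gray levels on nearby objects, where depth differences matter most for effects such as lens blur; the trade-off is that distant depths are only coarsely quantized.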

Having such data in the EXIF segment would be invaluable during post-processing. Photo manipulation software sees photos as flat 2D matrices of RGB values. A Z-map would provide a Z-coordinate for every pixel in the matrix.

Such data is not generally recorded with photos at the moment, so software like Photoshop has to guess at it. The spot healing tool in Photoshop works by examining the pixels neighboring the spot in order to cover it. If the spot sits on the edge of an object, Photoshop can grab pixels from the wrong side of the edge, and the end result is worse than the spot itself.
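Photoshop's actual healing algorithm is more elaborate than this, so the following is only an illustration of how a Z-map could help: a naive fill that averages neighboring pixels, but only those whose depth is close to the depth of the pixel being repaired. `heal_spot`, `window` and `depth_tol` are hypothetical names; the sketch works on a grayscale image stored as a list of rows and assumes the dust spot does not affect the Z-map itself.

```python
def heal_spot(image, depth, spot, window=2, depth_tol=0.1):
    """Return a copy of `image` with every (row, col) in `spot` replaced by
    the mean of nearby non-spot pixels whose depth is within `depth_tol`
    of that spot pixel's own depth."""
    spot = set(spot)
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]  # leave the input untouched
    for r, c in spot:
        donors = []
        for rr in range(max(0, r - window), min(rows, r + window + 1)):
            for cc in range(max(0, c - window), min(cols, c + window + 1)):
                if (rr, cc) in spot:
                    continue  # never copy from the damaged area itself
                if abs(depth[rr][cc] - depth[r][c]) <= depth_tol:
                    donors.append(image[rr][cc])
        if donors:  # no same-depth donor nearby: keep the pixel as-is
            out[r][c] = sum(donors) / len(donors)
    return out
```

Setting `depth_tol` very high turns the filter depth-blind, and it then blends the far background into the near object: the edge failure described above.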

Film simulation software could also work more efficiently and produce more accurate results if a Z-map were available, since light roll-off on film tends to differ from light roll-off on a sensor.

A lens blur filter and a depth-of-field simulation that mimics what a real camera would see are other examples of effects that rely on Z-maps to work well. A Z-depth map can also be used as a control layer for 3D matte extract, depth matte, 3D fog, ID matte and various other effects.
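To make the lens blur case concrete: given a Z-map decoded to real distances, a filter could compute, for each pixel, the blur-circle diameter a thin lens would produce, using the standard circle-of-confusion formula `c = A * |S2 - S1| / S2 * f / (S1 - f)`, where `A = f / N` is the aperture diameter, `S1` the focus distance and `S2` the pixel's distance. The sketch assumes distances in millimetres and a hypothetical sensor `pixel_pitch_mm`; it only computes blur radii, not the blur itself.

```python
import math


def coc_diameter_mm(focal_mm, f_number, focus_mm, subject_mm):
    """Thin-lens blur-circle diameter on the sensor (mm) for a point at
    subject_mm when the lens is focused at focus_mm (both from the lens)."""
    aperture_mm = focal_mm / f_number  # aperture diameter A = f / N
    if math.isinf(subject_mm):
        defocus = 1.0  # limit of |S2 - S1| / S2 as S2 -> infinity
    else:
        defocus = abs(subject_mm - focus_mm) / subject_mm
    return aperture_mm * defocus * focal_mm / (focus_mm - focal_mm)


def blur_radius_px(depth_map_mm, focal_mm, f_number, focus_mm, pixel_pitch_mm):
    """Per-pixel blur radius (in pixels) a lens-blur filter could use,
    given a Z-map already decoded to distances in mm."""
    return [[coc_diameter_mm(focal_mm, f_number, focus_mm, z) / pixel_pitch_mm / 2
             for z in row]
            for row in depth_map_mm]
```

For the 14mm F2.5 lens mentioned earlier, focused at 1 m with the background at infinity, `coc_diameter_mm(14, 2.5, 1000, math.inf)` gives 5.6 * 14 / 986, about 0.08 mm.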

Because the Z-map is derived from two photos shot in sequence, with a slight offset in both time and perspective, a confidence level for the Z-map should be stored in the EXIF data so that manipulation algorithms can take it into account. This parameter could also serve as an indicator of the depth performance of future cameras.
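EXIF has no standard tag for a Z-map or its confidence, so this is purely a proposal. A common way to judge a stereo depth map is a left-right consistency check: disparities are computed once from the left view and once from the right view, and a pixel is trusted only if the two agree. Pixels that moved between the two exposures tend to fail this check as well. The single fraction returned below is one candidate for the confidence value discussed above; the function name and the `tol` parameter are hypothetical.

```python
def lr_consistency_confidence(disp_left, disp_right, tol=1):
    """Fraction of verifiable left-image pixels whose disparity is confirmed
    by the right image.

    disp_left[x]  : disparity of left pixel x (its match is right pixel x - d)
    disp_right[x] : disparity of right pixel x (its match is left pixel x + d)
    """
    checked = consistent = 0
    for x, d in enumerate(disp_left):
        xr = x - d
        if not 0 <= xr < len(disp_right):
            continue  # match falls outside the right image: cannot verify
        checked += 1
        if abs(d - disp_right[xr]) <= tol:
            consistent += 1
    return consistent / checked if checked else 0.0
```

A camera could store this score alongside the Z-map; a per-pixel pass/fail mask from the same check would let editing software ignore only the unreliable regions.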

For more on three-dimensional Z-depth maps and related computer graphics, visit CGArena.