A series of interesting recent articles makes you wonder whether it will still be worth paying big money for good lenses in the future, given that digital imaging is more and more about in-camera algorithms and post-processing. A digital image is nothing but a numeric (normally binary) representation, and therefore an interpretation. Yes, each lens has a unique character. But technology is advancing rapidly. Take the software being developed by a research project called High-Quality Computational Imaging Through Simple Lenses at the University of British Columbia: it is able to turn technically crappy images into high-quality ones. Meaning: they use a cheapo lens for high-end results.

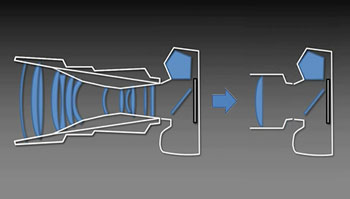

Good lenses are complex, expensive, large, and difficult to manufacture and maintain. This project proposes replacing complex optics with simple lens elements plus a set of computational photography techniques that compensate for the resulting quality deficiencies.

Naturally, such an approach is much cheaper, and the lenses are much smaller and lighter.

The project's comparison samples were shot with a cheap single plano-convex lens at F4.5.

There is more in the project's video.

Promising start, but geek talk for now: the technique won't be ready for the market for some time to come. Here is how it works. The researchers calibrate the cheap lens using a test pattern to estimate its point spread functions (PSFs), which describe how a single point of light is blurred and distorted by the lens.
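To make the calibration step concrete, here is a minimal 1D sketch, assuming a noise-free capture and a calibration pattern that is known exactly. It estimates the PSF with a regularized least-squares division in the frequency domain, K = conj(S)·B / (|S|² + eps). This is a textbook stand-in, not the UBC project's actual calibration procedure; the random pattern, the 5-tap "true" PSF and `eps` are all hypothetical.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform (fine for short 1D signals)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft(); keeps only the real part, since our signals are real."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def circular_convolve(kernel, signal):
    """b[i] = sum_j kernel[j] * signal[(i - j) mod n]: a toy model of lens blur."""
    n = len(signal)
    return [sum(kernel[j] * signal[(i - j) % n] for j in range(n)) for i in range(n)]


def estimate_psf(pattern, captured, eps=1e-9):
    """Regularized least-squares PSF estimate: K = conj(S) * B / (|S|^2 + eps)."""
    S, B = dft(pattern), dft(captured)
    K = [s.conjugate() * b / (abs(s) ** 2 + eps) for s, b in zip(S, B)]
    return idft(K)


n = 64
rng = random.Random(0)
pattern = [rng.random() for _ in range(n)]  # hypothetical random calibration chart

# Hypothetical "true" PSF of the cheap lens, centered at index 0 (wraps around).
true_psf = [0.0] * n
true_psf[0], true_psf[1], true_psf[-1] = 0.5, 0.2, 0.2
true_psf[2], true_psf[-2] = 0.05, 0.05

captured = circular_convolve(true_psf, pattern)  # noise-free "photo" of the chart
psf_hat = estimate_psf(pattern, captured)
max_err = max(abs(a - b) for a, b in zip(psf_hat, true_psf))
print(f"largest PSF estimation error: {max_err:.2e}")
```

In this idealized setting the estimate matches the hidden PSF almost exactly; with real sensor noise, `eps` would need to be larger and the estimate would be correspondingly rougher.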

They use this knowledge to reconstruct the photo that would have been taken if not for the softness (spherical aberration) of the lens. This is unlike a normal sharpening filter, which just applies a convolution to emphasize areas of contrast rather than trying to recover the original image.
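The difference between sharpening and reconstruction shows up even in a toy example. The sketch below is a minimal 1D illustration, assuming the PSF is known exactly and the image is noise-free. It compares unsharp masking with Tikhonov-regularized (Wiener-style) deconvolution; this is a swapped-in textbook method, not the project's own reconstruction algorithm, and the bar-shaped scene, the 3-tap PSF and `lam` are hypothetical.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft(); keeps only the real part."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def circular_convolve(kernel, signal):
    """b[i] = sum_j kernel[j] * signal[(i - j) mod n]."""
    n = len(signal)
    return [sum(kernel[j] * signal[(i - j) % n] for j in range(n)) for i in range(n)]


def deconvolve(blurred, psf, lam=1e-6):
    """Tikhonov-regularized deconvolution: X = conj(K) * B / (|K|^2 + lam)."""
    B, K = dft(blurred), dft(psf)
    return idft([k.conjugate() * b / (abs(k) ** 2 + lam) for k, b in zip(K, B)])


def unsharp_mask(blurred, psf, amount=1.0):
    """Classic sharpening: add back the difference between the image and a re-blurred copy."""
    reblurred = circular_convolve(psf, blurred)
    return [b + amount * (b - r) for b, r in zip(blurred, reblurred)]


def max_error(a, b):
    return max(abs(x - y) for x, y in zip(a, b))


n = 64
scene = [1.0 if 16 <= i < 48 else 0.0 for i in range(n)]  # a bright bar with two hard edges
psf = [0.0] * n
psf[0], psf[1], psf[-1] = 0.6, 0.2, 0.2  # hypothetical 3-tap lens blur

blurred = circular_convolve(psf, scene)
err_deconv = max_error(deconvolve(blurred, psf), scene)
err_sharpen = max_error(unsharp_mask(blurred, psf), scene)
print(f"deconvolution error: {err_deconv:.2e}, unsharp mask error: {err_sharpen:.2f}")
```

Deconvolution recovers the bar almost exactly, while unsharp masking leaves visible errors at the edges. With real sensor noise, `lam` has to be raised to keep the noise from being amplified, so the recovery would be less clean.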

The approach has some limitations. The biggest one is that the technique currently only accounts for the PSFs of objects at a specific distance from the camera, which can lead to reconstruction errors and image artifacts: PSFs differ for objects nearer to or farther from the camera than the distance the lens was calibrated for.
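The depth limitation can be illustrated with the same toy setup. In this minimal sketch, the assumption (made up for illustration) is that an object away from the calibration distance is blurred by a wider PSF than the one that was measured. Deconvolving with the calibrated PSF then leaves residual blur at the edges, while the matching PSF recovers the scene. Both PSFs and the scene are hypothetical, and the regularized deconvolution is a textbook stand-in for the project's method.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft(); keeps only the real part."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def circular_convolve(kernel, signal):
    """b[i] = sum_j kernel[j] * signal[(i - j) mod n]."""
    n = len(signal)
    return [sum(kernel[j] * signal[(i - j) % n] for j in range(n)) for i in range(n)]


def deconvolve(blurred, psf, lam=1e-6):
    """Tikhonov-regularized deconvolution: X = conj(K) * B / (|K|^2 + lam)."""
    B, K = dft(blurred), dft(psf)
    return idft([k.conjugate() * b / (abs(k) ** 2 + lam) for k, b in zip(K, B)])


def three_tap_psf(n, center, side):
    """Symmetric 3-tap PSF centered at index 0 (wrapping around)."""
    psf = [0.0] * n
    psf[0], psf[1], psf[-1] = center, side, side
    return psf


def max_error(a, b):
    return max(abs(x - y) for x, y in zip(a, b))


n = 64
scene = [1.0 if 16 <= i < 48 else 0.0 for i in range(n)]
calibrated_psf = three_tap_psf(n, 0.8, 0.1)    # hypothetical PSF at the calibration distance
off_distance_psf = three_tap_psf(n, 0.6, 0.2)  # hypothetical wider PSF for a nearer/farther object

photo = circular_convolve(off_distance_psf, scene)
err_matched = max_error(deconvolve(photo, off_distance_psf), scene)
err_mismatched = max_error(deconvolve(photo, calibrated_psf), scene)
print(f"matched PSF error: {err_matched:.2e}, calibrated-distance PSF error: {err_mismatched:.2f}")
```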

The system also runs into trouble at apertures wider than F2, because the blur kernels become too large.
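A back-of-the-envelope calculation suggests why wide apertures hurt so quickly. Assuming third-order (Seidel) spherical aberration dominates and the focal length stays fixed, the transverse blur spot grows with the cube of the aperture diameter, i.e. with 1/N³ for f-number N. The helper name below is hypothetical.

```python
def spherical_blur_ratio(f_number_from, f_number_to):
    """Growth factor of the blur spot from third-order spherical aberration when
    opening up from f_number_from to f_number_to at a fixed focal length.

    Assumption: transverse spherical aberration scales with the cube of the
    marginal ray height (Seidel approximation), and ray height ~ 1 / f-number.
    """
    return (f_number_from / f_number_to) ** 3


diameter_ratio = spherical_blur_ratio(4.5, 2.0)  # F4.5 samples vs the F2 limit
area_ratio = diameter_ratio ** 2  # kernel area in pixels grows with the square
print(f"blur diameter x{diameter_ratio:.1f}, kernel area x{area_ratio:.0f}")
```

Under these assumptions, going from the F4.5 used in the samples to F2 makes the spot roughly 11 times wider and the kernel about 130 times larger in area, so a kernel that is manageable at F4.5 could plausibly become too large at F2.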

What does this mean for the future of camera optics? Advancing technology will let camera makers alter formerly unshakable physical and optical parameters, allowing them to build smaller, lighter and faster lenses.